Numerical evolution of dynamical systems, and Lagrangian mechanics

On using Lagrangian mechanics, and how to use it to simulate complex systems and vehicle dynamics.

The motivation for this article once again comes from the MIT Motorsports team. I have always wanted to model the full transient performance of the car, and I have enjoyed investing time into designing and developing a variety of models for this task.\(\renewcommand{\d}{\mathop{}\!\mathrm{d}}\newcommand{\uvec}[1]{\hat{\mathbf{#1}}}\newcommand{\od}[2]{\frac{\d #1}{\d #2}}\newcommand{\md}[2]{\frac{\text{D} #1}{\text{D} #2}}\newcommand{\odn}[3]{\frac{\d^{#3} #1}{\d #2 ^ {#3}}}\newcommand{\pd}[2]{\frac{\partial #1}{\partial #2}}\newcommand{\br}[1]{\left(#1\right)}\newcommand{\abs}[1]{\left|#1\right|}\newcommand{\super}[1]{\textsuperscript{#1}}\newcommand{\atantwo}[0]{\text{atan2}}

\newcommand{\highlight}[2]{\colorbox{#1}{$\displaystyle#2$}}\)

The previous article showed a method to analyse very generic dynamical systems by automatically identifying degrees of freedom, and then evaluating the dynamics in these degrees of freedom using an extension of D'Alemberts principle. However, I decided to move away from this method for the task of vehicle design for a few reasons:

- The computation in the previous method is too expensive, and simulation can end up being prohibitively slow. The method also has some issues with numerical instability

- It is very difficult to make design decisions from such a complex model. The previous method took all suspension geometry as an input, but I think I am still a ways away from being able to directly optimize the full car's suspension geometry.

To get around these issues, I decided to use a simpler model that models all complexities of suspension e.t.c. as simple springs. This (for now) does not account for progressive motion ratios and other higher level complexities, but still provides quite a comprehensive and easy to tweak model of the full car.

However, I want to emphasize that this article is not an article on vehicle design. This is an article on physics and numerical computation, so I will not go into deep details on how the car model itself works (maybe some other time!). This is an article on Lagrangian mechanics, a powerful tool to analytically evaluate dynamic systems, and how it can be applied in a computational context.

How Lagrangian mechanics works

Lagrangian mechanics is incredibly powerful in dynamics evaluation, described as "the sledgehammer of dynamics". However, a lot of the information on this topic is heavily fragmented and doesn't, in my opinion, give satisfying explanations on how the Euler-Lagrange equations work. In this first section, I will look at the Euler-Lagrange equations and how they can be derived from first principles.

Euler-Lagrange equations

One thing many mechanics students may not know is that the Euler-Lagrange equations are actually a result from variational calculus, and can be derived without a drop of physics. Consider some once-differentiable variable \(q\) and some "time variable" \(t\). \(q\) can be (and usually is a vector) so we will denote it as \(\vec{q}\).

The goal of the calculus of variations in this case in this case is to find some function \(\vec{q}(t)\) that is a stationary point of the following integral:

$$S[q] = \int_{a}^b L(t,\vec{q}(t), \dot{\vec{q}}(t)) \hspace{5px} \text{d}t$$

subject to the condition that \(\vec{q}(a)\) is some constant \(\vec{A}\) and \(\vec{q}(b) = \vec{B}\).

Here, \(L\) is any twice differentiable function on \(t\), \(\vec{q}(t)\) and \(\dot{\vec{q}}(t)\). \(a, b\) are arbitrary constants.

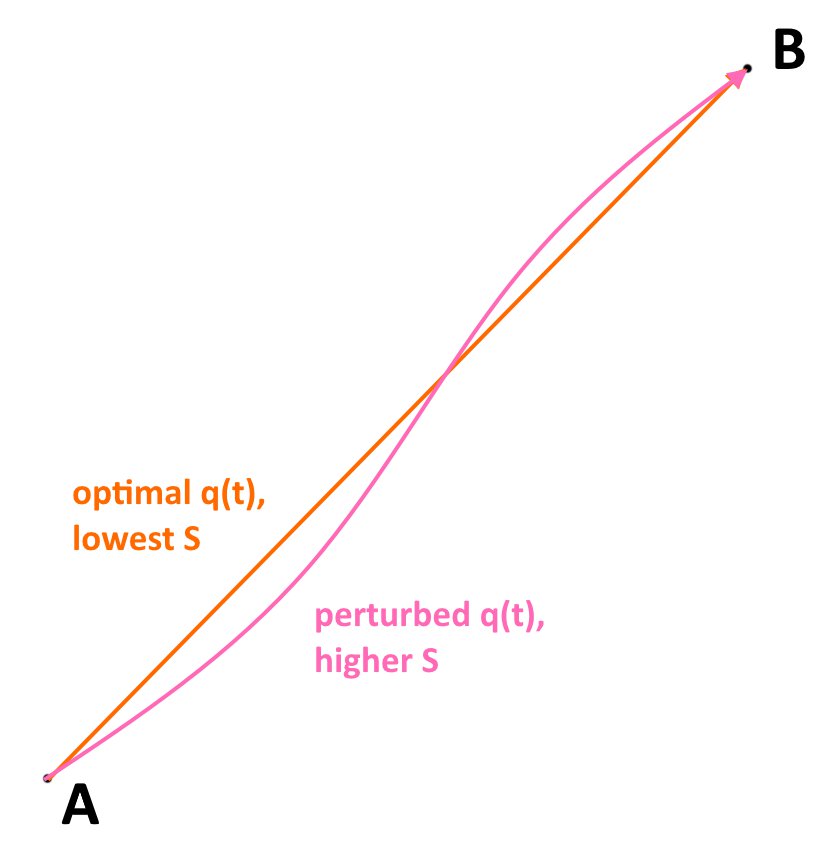

This might seem a bit abstract, so to provide some intuition, you can think of \(\vec{q}(t)\) as being the state of some system over time. The constraints \(\vec{q}(a) = \vec{A}\) and \(\vec{q}(b) = \vec{B}\) means that \(\vec{q}\) starts and ends at some predefined states at times \(a\) and \(b\). You can think of \(L(t, \vec{q}(t), \dot{\vec{q}}(t))\) being the "cost" of the current state and its rate of change at time \(t\), and the goal of the whole problem is to find out the "route" of \(\vec{q}(t)\) between times \(a\) and \(b\) in a way that minimizes the total cost given by \(L\) over the route.

This paradigm of minimizing a cost over a route shows up sometimes in physics. One that many readers may be familiar with is Fermat's principle, which states that light takes the path which minimizes the time taken between two points. (This doesn't immediately work with this formulation, as the "time variable" becomes distance, but you get the general jist)The Euler-Lagrange equations don't allow you to find a global minimum (calculus in general can't do that very well) but they can give some information on a stationary point of \(S\). A "stationary point" here means that if I have some path \(\vec{q}(t)\) and I slightly perturb it while continuing to follow the constraints, \(S\) will not change to the first order. This often means that the path found is the path that minimizes \(S\).

The Euler Lagrange equations are actually derived by following this exact idea numerically. Suppose that \(\vec{q}^*(t)\) is my optimal path. Consider an alternative path given by \(\vec{q}^*(t) + \varepsilon \vec{\eta}(t)\), given the constraints that \(\vec{\eta}(a) = \vec{\eta}(b) = \vec{0}\) to ensure that the new path continues to satisfy \(\vec{q}(a) = \vec{A}\) and \(\vec{q}(b) = \vec{B}\). At a stationary point, no matter what \(\vec{\eta}(t)\) is, we expect

$$\frac{\text{d}}{\text{d}\varepsilon} S[q^* + \varepsilon \eta] = \frac{\text{d}}{\text{d}\varepsilon} \int_{a}^b L(t,\vec{q}^*(t) + \varepsilon \vec{\eta}(t), \dot{\vec{q}^*}(t)) + \varepsilon\dot{\vec{\eta}^*}(t)) \hspace{5px} \text{d}t = 0$$

This means that for small \(\varepsilon\), slightly changing the path by \(\eta\) will, to first order, not change \(S\) i.e. we have found a stationary point of \(S\).

Bring the derivative inside the integral (as the integral is in a different variable) and then differentiate \(L\):

$$

\begin{align}

\frac{\text{d}}{\text{d}\varepsilon} S[q^* + \varepsilon \eta] &= \frac{\text{d}}{\text{d}\varepsilon} \int_{a}^b L(t,\vec{q}^*(t) + \varepsilon \vec{\eta}(t), \dot{\vec{q}^*}(t)) + \varepsilon\dot{\vec{\eta}^*}(t)) \hspace{5px} \text{d}t \\

&= \int_{a}^b \frac{\text{d}}{\text{d}\varepsilon} L(t,\vec{q}^*(t) + \varepsilon \vec{\eta}(t), \dot{\vec{q}^*}(t)) + \varepsilon\dot{\vec{\eta}^*}(t)) \hspace{5px} \text{d}t \\

&= \int_{a}^b \left[ \vec{\eta}(t) \pd{}{\vec{q}} L(t) + \dot{\vec{\eta}}(t) \pd{}{\dot{\vec{q}}} L(t)

\right] \text{d}t

\end{align}

$$

Some comments on the final line:

- I am, somewhat confusingly, using \(L(t)\) to abbreviate \(L(t,\vec{q}^*(t) + \varepsilon \vec{\eta}(t), \dot{\vec{q}^*}(t)) + \varepsilon\dot{\vec{\eta}^*}(t))\)

- \(\pd{}{\vec{q}}\) and \(\pd{}{\dot{\vec{q}}}\) both are taking the derivative of the \(L\) function with respect to its second and third arguments respectively.

To proceed from here, note that the integration by parts can be used on the second term inside the integral:

$$\int_{a}^b \dot{\vec{\eta}}(t) \pd{}{\dot{\vec{q}}} L(t) \text{d}t = \left[\vec{\eta}(t) \pd{}{\dot{\vec{q}}} L(t) \right]^b_{a} - \int_{a}^b \vec{\eta}(t) \frac{\text{d}}{\text{d}t} \left( \pd{}{\dot{\vec{q}}} L(t) \right) \text{d}t$$

However! note that from our assumption that \(\vec{\eta}(a) = \vec{\eta}(b) = \vec{0}\), the first term is zero! We can therefore simplify our earlier expression as follows:

$$\begin{align}

\frac{\text{d}}{\text{d}\varepsilon} S[q^* + \varepsilon \eta] &= \int_{a}^b \left[ \vec{\eta}(t) \pd{}{\vec{q}} L(t) - \vec{\eta}(t) \frac{\text{d}}{\text{d}t} \left( \pd{}{\dot{\vec{q}}} L(t) \right)\right] \text{d}t \\

&= \int_{a}^b \vec{\eta}(t) \left[ \pd{}{\vec{q}} L(t) - \frac{\text{d}}{\text{d}t} \left( \pd{}{\dot{\vec{q}}} L(t) \right)\right] \text{d}t

\end{align}

$$

The final step technically requires more proof as it is the fundamental lemma of the calculus of variations, but it does feel intuitive. As a reminder, our goal is to find a \(\vec{q}(t)\) where, for all valid variations \(\eta(t)\), \(\frac{\text{d}}{\text{d}\varepsilon} S[q^* + \varepsilon \eta] = 0\). Therefore, we have

$$\int_{a}^b \vec{\eta}(t) \left[ \pd{}{\vec{q}} L(t) - \frac{\text{d}}{\text{d}t} \left( \pd{}{\dot{\vec{q}}} L(t) \right)\right] \text{d}t=0$$

As \(\vec{\eta}(t)\) can be almost any function, we can pretty much always pick an \(\eta(t)\) that makes this integral nonzero unless

$$\pd{}{\vec{q}} L(t) - \frac{\text{d}}{\text{d}t} \left( \pd{}{\dot{\vec{q}}} L(t) \right) = 0$$

This is the Euler-Lagrange equation! No physics, no nothing, just finding minimizing paths. You will often see it in the following form:

$$\pd{L}{\vec{q}} - \frac{\text{d}}{\text{d}t} \left( \pd{L}{\dot{\vec{q}}} \right) = 0$$

What does this have to do with physics?

Great question! It is also one that I feel I may not yet be qualified to answer. However, as previously mentioned, one perspective is that the Lagrangian finds the route a system takes that minimizes some cost function. In mechanics, the function \(L\) is the kinetic energy of the system, minus the potential energy of the system, often written as

$$L = T-V$$

This function is then called a Lagrangian. The "total cost over time" \(S\) is then called the action, hence the name "principle of least action". On some intuitive level, you can sort of convince yourself that this makes sense. Imagine you are dropping a ball, and you know that at time \(t=0\) it is in your hand (i.e. \(\vec{q}(0)\) is fixed) and at time \(t=1\) it is on the floor (\(\vec{q}(1)\) is fixed). The state vector \(\vec{q}(t)\) will just represent the height of the ball over time, and you can very simply write the Lagrangian (\(\frac{1}{2}m \dot{q}(t)^2-mgq(t)\)), and use the Euler-Lagrange equations to get the equation of motion for the ball that you would expect. But stepping back to think about it, the path where the ball just linearly accelerates downwards feels like the best "cost minimizing" path. If it somehow spent longer higher up in the air, it would have more time with a higher potential energy, reducing \(L\) and \(S\) in turn, but it would have to move faster to reach the bottom in time, increasing the kinetic energy \(T\).

Nevertheless, I personally can't find myself sold by the whole argument. The Lagrangian is simply unintuitive. I also believe that the idea that "systems somehow "know" where they are in the future and just take the minimal path to get there" to be completely ridiculous; my personal philosophy is that physical laws should apply microscopically to generate macroscopic consequences, not macroscopic constraints dictating microscopic behaviour. Maybe I am too immature in the world of physics and I will have to come back and rewrite this article (and if someone wants to teach me please reach out!), but for now, this idea is not for me.

However, not all is lost. There is a sense in which you can go from fundamental, Newtonian laws of motion, and use those to recover the Euler-Lagrange equations. Following all of the logic backwards, we can use Newtonian mechanics to show the "principle of least action", instead of going the other way.

D'Alembert's principle

Deriving Lagrangian mechanics from D'Alembert's principle actually ends up being more powerful, as it allows you to consider the effects of external forces that do not come from conservative energies.

D'Alembert's principle is the follows: Consider some system made up of \(n\) masses, with masses \(m_{1}, m_{2} \dots m_{n}\) and positions \(\vec{r}_{1}, \vec{r}_{2} \dots \vec{r}_{n}\). Now, suppose that these masses are subject to some holonomic constraints. This means that there are some functions \(c_{1}(\vec{r}_{1}, \vec{r}_{2} \dots \vec{r}_{n}), c_{2}(\vec{r}_{1}, \vec{r}_{2} \dots \vec{r}_{n})\) that are always 0 in any valid configuration of the system. Consider, for example, two points connected by a rigid rod: the distance between the two points will always be a constant length \(l\), and so the function \(c(\vec{r}_{1}, \vec{r}_{2}) = |\vec{r}_{1}- \vec{r}_{2}|^2-l^2\) is always 0.

Next, consider any infinitesimal, kinematically permissible variation in the system. This means we move the points around in the system somehow, but we continue to satisfy the constraints. In our rod example, the two points could move a bit to the left, or rotate them a bit, but they could not get further apart from each other. Then, d'Alembert's principle states that for any variations of this form:

$$\sum_{\text{all particles}} (\vec{F}_{i}-m_{i} \vec{a}_{i}) \cdot \delta \vec{r_{i}} = 0$$

Here:

- \(\vec{F_{i}}\) is the total external force on the \(i\)'th particle. These are forces that are not due to the constraints

- \(\vec{a}_{i}\) is the acceleration of the \(i\)'th particle

- \(\delta \vec{r}_{i}\) is the infinitesimal change in position of the \(i\)'th particle, in the variation that we are considering.

This feels more intuitive! It looks a lot more like Newton's laws, what with the \(F=ma\) type term in there. However, I find that this also is very often not properly proved.

Why does d'Alembert's principle work?

D'Alembert's principle relies on constraint forces always being perpendicular to permissible virtual displacements.

Each particle in the system experiences forces from two sources: the external forces on the system, and the forces due to constraints. For any arbitrary particle (say the \(i\)'th particle), let the total external force on the particle be \(\vec{F_{i}}\) as before, and let the total constraint force be \(\vec{C_{i}}\). Then, we know from Newtonian mechanics that

$$

\vec{F_{i}} + \vec{C_{i}} = m_{i}\vec{a}_{i}

$$

Then, we can rearrange and sum across all particles to obtain

$$

\sum_{\text{all particles}} (\vec{F_{i}} + \vec{C_{i}} - m_{i}\vec{a}_{i}) \cdot \delta \vec{r}_{i} = 0

$$

The statement above is true for any displacement for the particles, and the inclusion of the the \(\delta \vec{r_{i}}\) seems arbitrary. However, if we know that constraint forces are perpendicular to kinematically permissible virtual displacements, then we can *restrict \(\delta \vec{r_{i}}\) to only include kinematically permissible virtual displacements.* Then, by this perpendicular-ity we have

$$

\sum_{\text{all particles}} \vec{C_{i}} \cdot \delta \vec{r_{i}} = 0

$$

And we once again recover d'Alembert's principle:

$$\sum_{\text{all particles}} (\vec{F}_{i}-m_{i} \vec{a}_{i}) \cdot \delta \vec{r_{i}} = 0$$

However, why are constraint forces necessarily perpendicular to permissible virtual displacements? In fact, this is not necessarily true: it is very possible to construct convoluted examples where this doesn't hold (imagine a particle constrained to be on a treadmill, but if the particle is pulled upwards the treadmill moves forwards, for some strange reason). However, constraint forces usually do no work. Imagine a fully rigid link again: it cannot store or dissipate energy, and so in any permissible displacement, the forces it exerts overall do no work, and thus \(\sum_{\text{all particles}} \vec{C_{i}} \cdot \delta \vec{r_{i}} = 0\) as this expression is just the work done by constraint forces.

Using D'Alembert's principle to get Lagrangian mechanics

Okay! We are now ready to do what we set out to do: get Lagrangian mechanics back from d'Alembert's principle, which we just showed was a simple consequence of Newtonian mechanics. I acknowledge Douglas Cline for the derivation in this section, whose work I have rephrased and reformatted, along with writing my own expositions on the steps taken.

To get started, we must first declare a set of generalised coordinates, that can fully and minimally describe the system. In other words, no generalised coordinates can be redundant and it should be possible to perturb any of the generalised coordinates independently of each other. This is our state vector, \(\vec{q}\). For example, in the case of single point moving in a fixed circle, there is only one generalised coordinate, probably the angle traversed by the point.

Using generalised coordinates, we can rewrite d'Alembert's principle, as

$$

\delta \vec{r_{i}} = \sum_{j} \pd{\vec{r_{i}}}{q_{j}} \delta q_{j}

$$

and then d'Alembert's principle becomes

$$\sum_{j} \left( \sum_{i} (\vec{F}_{i}-m_{i} \vec{a}_{i}) \cdot \pd{\vec{r_{i}}}{q_{j}} \right) \delta q_{j} = 0$$

However, from the way we defined generalised coordinates, any perturbation in the generalised coordinates is permissible. This means that we can set any one of the \(\delta q_{j}\) to be nonzero, and set all of the rest to be zero. For d'Alembert's principle above, this means that for all generalised coordinates \(q_{j}\):

$$

\sum_{i} (\vec{F}_{i}-m_{i} \vec{a}_{i}) \cdot \pd{\vec{r_{i}}}{q_{j}} = 0

$$

We begin by removing the external forcing from the system, by defining the generalised force

$$

Q_{j} = \sum_{i} \vec{F}_{i}\cdot \pd{\vec{r_{i}}}{q_{j}}

$$

Sidenote: this is a generalised force in the sense that multiplying this quantity by \(\delta q_{j}\) gives the total work done by that displacement.

This takes d'Alembert's principle to

$$

\sum_{i} \highlight{pink}{m_{i} \vec{a}_{i} \cdot \pd{\vec{r_{i}}}{q_{j}}} = Q_{j}

$$

Now, we prepare for a neat algebraic trick by writing \(\vec{a_{i}}\) as \(\ddot{\vec{r_{i}}}\). I have searched long and hard for some vaguely intuitive explanation of the following steps, but I haven't been very successful (again, if anyone has any insights on this, please contact me!). Anyway, note that

$$

\begin{align}

\highlight{pink}{m_{i}\vec{a}_{i} \cdot \pd{\vec{r_{i}}}{q_{j}}} &= m_{i }\ddot{\vec{r_{i}}} \cdot \pd{\vec{r_{i}}}{q_{j}} \\

&= \od{}{t}\highlight{aqua}{\left( m_{i}\dot{\vec{r_{i}}} \cdot \pd{\vec{r_{i}}}{q_{j}}\right)}

-

\highlight{yellow}{m_{i}\dot{\vec{r_{i}}} \cdot \frac{\text{d}}{\text{d}t} \left(\pd{\vec{r_{i}}}{q_{j}}\right)}

\end{align}

$$

by the product rule.

Now, look more closely at the first term. A not completely unreasonable thing to do is to try to write down \(m_{i} \dot{\vec{r_{i}}} \cdot \pd{\vec{r_{i}}}{q_{j}}\) using only \(\dot{\vec{r_{i}}}\). It turns out that this is completely possible, as note that

$$

\dot{\vec{r_{i}}} = \pd{\vec{r_{i}}}{q_{j}} \frac{\text{d}q_{j}}{\text{d}t} = \pd{\vec{r_{i}}}{q_{j}} \dot{q_{j}}

$$

And therefore

$$\pd{\dot{\vec{r_{i}}}}{\dot{q_{j}}} = \pd{\vec{r_{i}}}{q_{j}}$$

This lets us write

$$

\begin{align}

\highlight{aqua}{m_{i}\dot{\vec{r_{i}}} \cdot \pd{\vec{r_{i}}}{q_{j}}} &= m_{i}\dot{\vec{r_{i}}} \cdot \pd{\dot{\vec{r_{i}}}}{\dot{q_{j}}} \\

&= \pd{}{\dot{q_{j}}}\left(\frac{1}{2} m_{i} \dot{\vec{r_{i}}}^2 \right)

\end{align}

$$

This looks promising! A term has appeared that is just the kinetic energy of the i'th particle!

It keeps getting better, as we turn our attention to the yellow term. Note that

$$\od{}{t} \left(\pd{\vec{r_{i}}}{q_{j}}\right) = \od{}{t} \pd{}{q_{j}}(\vec{r_{i}}) = \pd{}{q_{j}} \od{}{t}(\vec{r_{i}}) = \pd{}{q_{j}} \dot{\vec{r_{i}}} = \pd{\dot{\vec{r_{i}}}}{q_{j}}$$

Now we can write

$$

\highlight{yellow}{m_{i}\dot{\vec{r_{i}}} \cdot \pd{\dot{\vec{r_{i}}}}{q_{j}}} = \pd{}{q_{j}}\left(\frac{1}{2} m_{i} \dot{\vec{r_{i}}}^2 \right)

$$

With the work we have done, we can finally get back to the statement of d'Alembert's principle:

$$

\begin{align}

\sum_{i} \highlight{pink}{m_{i} \vec{a}_{i} \cdot \pd{\vec{r_{i}}}{q_{j}}} &= Q_{j} \\

\sum_{i} \left[ \od{}{t}\highlight{aqua}{\left(

m_{i}\dot{\vec{r_{i}}} \cdot \pd{\vec{r_{i}}}{q_{j}}

\right)}

-

\highlight{yellow}{m_{i}\dot{\vec{r_{i}}} \cdot \frac{\text{d}}{\text{d}t} \left(\pd{\vec{r_{i}}}{q_{j}}\right)} \right] &= Q_{j} \\

\sum_{i} \left[ \od{}{t}\highlight{aqua}{\pd{}{\dot{q_{j}}}\left(\frac{1}{2} m_{i} \dot{\vec{r_{i}}}^2 \right)}

-

\highlight{yellow}{\pd{}{q_{j}}\left(\frac{1}{2} m_{i} \dot{\vec{r_{i}}}^2 \right)} \right] &= Q_{j}

\end{align}

$$

We can now rearrange the summation

$$\od{}{t}\pd{}{\dot{q_{j}}}\left(\sum_{i}\frac{1}{2} m_{i} \dot{\vec{r_{i}}}^2 \right)

-

\pd{}{q_{j}}\left(\sum_{i}\frac{1}{2} m_{i} \dot{\vec{r_{i}}}^2 \right) = Q_{j}$$

But \(\sum_{i} \frac{1}{2} m_{i} \dot{\vec{r_{i}}}^2\) is just the total kinetic energy of all particles! Call this quantity \(T\), and substitute back in:

$$\boxed{\od{}{t}\left[\pd{}{\dot{q_{j}}}(T)\right]

-

\pd{}{q_{j}}(T) = Q_{j}}$$

This should be very exciting! This looks just like the Euler-Lagrange equations, but \(L = T-V\) is replaced with just \(T\). This makes sense! We haven't accounted for forces on particles due to the potential. Let's get to it!

Recall our definition of generalised force:

$$

Q_{j} = \sum_{i} \highlight{lime}{\vec{F}_{i}\cdot \pd{\vec{r_{i}}}{q_{j}}}

$$

However, we can divide \(\vec{F}_{i}\) into the conservative forces \(\vec{F_{i}}^C\) from potential energy, and \(\vec{F_{i}}^{NC}\) from other sources. We also know that the conservative force on the i'th particle is

$$

\vec{F_{i}}^C = -\pd{V}{\vec{r_{i}}}

$$

where \(V\) is the potential energy of the system.

$$

\begin{align}

Q_{j} &= \sum_{i} \highlight{lime}{\vec{F_{i}}\cdot \pd{\vec{r_{i}}}{q_{j}}} \\

&= \sum_{i} \left(\vec{F_{i}}^C +\vec{F_{i}}^{NC}\right)\cdot \pd{\vec{r_{i}}}{q_{j}} \\

&= \sum_{i} \left(-\pd{V}{\vec{r_{i}}} +\vec{F_{i}}^{NC}\right)\cdot \pd{\vec{r_{i}}}{q_{j}} \\

&= \sum_{i} \left( \vec{F_{i}}^{NC}\cdot \pd{\vec{r_{i}}}{q_{j}} \right) -\pd{V}{q_{j}}

\end{align}

$$

We can define a non-conservative generalised force, that encapsulates all forces not from potential fields.

$$

Q_{j}^{NC} = \sum_{i} \vec{F_{i}}^{NC}\cdot \pd{\vec{r_{i}}}{q_{j}}

$$

Now we can write

$$

Q_{j} = Q_{j}^{NC} - \pd{V}{q_{j}}

$$

Substituting this back into the original equation, $$\boxed{\od{}{t}\left[\pd{}{\dot{q_{j}}}(T)\right]

-

\pd{}{q_{j}}(T) = Q_{j}^{NC} - \pd{V}{q_{j}}}$$

We can continue with

$$

\od{}{t}\left[\pd{}{\dot{q_{j}}}(T)\right]

-

\pd{}{q_{j}}(T - V) = Q_{j}^{NC}

$$

Finally, if the potential energy is not dependant on the generalised velocities, \(\pd{}{\dot{q_{j}}}(V) = 0\) and then we can finally finish with

$$

\od{}{t}\left[\pd{}{\dot{q_{j}}}(T - V)\right]

-

\pd{}{q_{j}}(T - V) = Q_{j}^{NC}

$$

But if we define \(L = T-V\), then we have the final finished equation

$$\od{}{t}\left[\pd{L}{\dot{q_{j}}}\right]

-

\pd{L}{q_{j}} = Q_{j}^{NC}$$

This is exactly the same expression as we got from the Euler-Lagrange formulation!

If you remember from earlier, we accomplished we set out to do. Instead of simply defining action and saying that the system follows the route that minimizes the action integral to obtain the above equation, we obtained the above equation from d'Alembert's principle, derived straight from Newtonian mechanics. We can now work backwards and conclude that in the case of no non-conservative forces, the principle of least action holds. This is important as later in physics, assuming the principle of least action is useful, for example in quantum mechanics problems.

However, we have done something even more powerful. We have also proved a framework to work with non-conservative external forces, and this is going to be incredibly important in the next section: using these equations for simulation purposes.

Modelling dynamics using Lagrangian mechanics

To model vehicle dynamics, we need to start with some model of the car. In the interests of keeping our team's competitive edge, I will not be sharing the exact model here. However, for exemplary purposes, I will be showing a simpler model on which this method can be used.

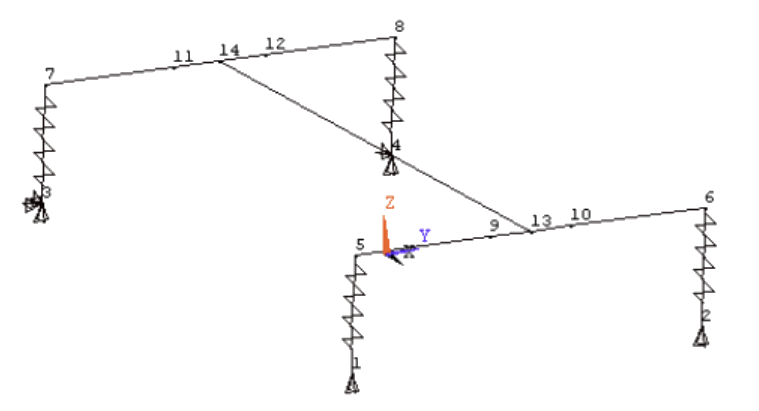

This image depicts a single rigid body for a frame, and four springs representing the suspension. There are now 6 generalised coordinates for the system, describing the position and rotation (I use Euler angles) of the full frame.

However, to write the Lagrangian, we need to write the energy stored in the springs and the kinetic energy of the system in terms of the generalised coordinates. The expressions in this will become absolutely horrible and completely intractable by any human. However, using a symbolic algebra package like SymPy, we can directly write down these equations. This is a mostly uninteresting endeavour, but it can definitely be done! The general strategy for this is to write symbolic expressions for the positions of key tracked points, and then writing expressions for the distances of these points to work out spring lengths and thus energy.

Armed with a symbolic expression of the Lagrangian, we can now generate one equation of motion for each of the generalised coordinates using the Euler-Lagrange equations from before. But how does this allow us to time evolve the system? Let's take a look at the equation from before:

$$\od{}{t}\left[\pd{L}{\dot{q_{j}}}\right]

-

\pd{L}{q_{j}} = Q_{j}^{NC}$$

The first point of action is to deal with the non-conservative generalised forces \(Q_{j}^{NC}\). Recall their definition

$$Q_{j}^{NC} = \sum_{i} \vec{F_{i}}^{NC}\cdot \pd{\vec{r_{i}}}{q_{j}}$$

These forces can represent multiple things in this model, including:

- Aerodynamic forces

- Damper forces

- But most importantly, tire ground forces.

The most efficient way to implement this in the program is to implement this as some set of forces with known direction, but unknown magnitude. Write each non-conservative force as \(c_{k}\hat{\vec{F_{k}}}\), where \(c_{k}\) is the magnitude of the \(k\)'th non-conservative force and \(\hat{\vec{F_{k}}}\) is a unit vector in the direction of the force. \(\hat{\vec{F_{k}}}\) does not have to be constant, but for this formulation to work, we need to be able to write \(\hat{\vec{F_{k}}}\) symbolically using SymPy.

As an example of this formulation, to represent arbitrary tire-ground forces, declare two non-conservative forces for each tire, one for the longitudinal component and one for the lateral component. If one of our generalised coordinates is the yaw of the car (\(\alpha\)), then we can write our two \(\hat{\vec{F_{k}}}\) as \(\hat{\mathbf{i}}\cos \alpha + \hat{\mathbf{j}}\sin \alpha\) and \(-\hat{\mathbf{i}}\sin \alpha + \hat{\mathbf{j}}\cos \alpha\).

Now for each \(\hat{\vec{F_{k}}}\), if \(\vec{r_{k}}\) is the position vector of its point of application, then the contribution of this generalised force to the non-conservative generalised force \(Q_{j}^{NC}\) is \(c_{k} \cdot \left( \hat{\vec{F_{k}}} \cdot \pd{\vec{r_{k}}}{q_{j}}\right)\), and the total generalised force is:

$$Q_{j}^{NC} = \sum_{k} c_{k} \cdot \left( \hat{\vec{F_{k}}} \cdot \pd{\vec{r_{k}}}{q_{j}}\right)$$

We can now write a symbolic expression for each \(\hat{\vec{F_{k}}} \cdot \pd{\vec{r_{k}}}{q_{j}}\), and we can express each \(Q_{j}^{NC}\) as a linear combination of these symbolic expressions. Our equation now looks as follows:

$$\od{}{t}\left[\pd{L}{\dot{q_{j}}}\right]

-

\pd{L}{q_{j}} = \sum_{k} c_{k} \cdot \left( \hat{\vec{F_{k}}} \cdot \pd{\vec{r_{k}}}{q_{j}}\right)$$

It is now time to deal with the left hand side of the expression. Before we proceed though, let's think more about the specific Lagrangian in question for most mechanical systems.

As we established earlier, we can write each \(\vec{r_{i}}\) as some function of the generalised coordinates, \(\vec{r_{i}}(\vec{q})\). We also established that the potential \(V\) was only dependent on the generalised coordinates and not the velocities for the above derivation to work. Note that in cases where working with electromagnetic fields, this may not necessarily be true! However, it is true in our case.

Thus, the Lagrangian is

$$

\begin{align}

L &= T - V \\

&= \sum_{i} \frac{1}{2} m_{i} \left( \od{}{t}(\vec{r_{i}}(\vec{q})) \right)^2 - V(\vec{q}) \\

&= \sum_{i} \frac{1}{2} m_{i} \left(\pd{\vec{r_{i}}}{\vec{q}} \dot{\vec{q}} \right)^2 - V(\vec{q})

\end{align}

$$Denote \(\pd{\vec{r_{i}}}{\vec{q}} = F_{i}(\vec{q})\) The LHS of the equation of motion then becomes

$$

\begin{align}

\od{}{t}\left[\pd{L}{\dot{\vec{q}}}\right] - \pd{L}{\vec{q}} &= \od{}{t}\left[\sum_{i} m_{i} \left(F_{i}(\vec{q})\right)^2 \dot{\vec{q}} \right] - \pd{L}{\vec{q}} \\

&= \left[\sum_{i} m_{i} \left(F_{i}(\vec{q})\right)^2 \ddot{\vec{q}} + \sum_{i} 2m_{i} \left(F_{i}(\vec{q})\right) \dot{\vec{q}}^2 \right] - \pd{L}{\vec{q}}

\end{align}

$$

This equation implicitly puts the equations of motion in a vector, to slightly simplify the notation.

The main point of this is to show that for some functions \(P(\vec{q}, \dot{\vec{q}})\) and \(Q(\vec{q}, \dot{\vec{q}})\), the entire LHS can be written as

$$

\od{}{t}\left[\pd{L}{\dot{\vec{q}}}\right] - \pd{L}{\vec{q}} = P(\vec{q}, \dot{\vec{q}}) \ddot{\vec{q}} + Q(\vec{q}, \dot{\vec{q}})

$$

And for a single equation of motion,

$$

\od{}{t}\left[\pd{L}{q_{j}}\right] - \pd{L}{q_{j}} = P_{j}(\vec{q}, \dot{\vec{q}}) \cdot \ddot{\vec{q}} + Q_{j}(\vec{q}, \dot{\vec{q}})

$$

This seems meaningless, but its consequences are immense. This opens a window into our strategy to time evolve the system: write the motion simply as a first order ODE. If we can somehow compute \(\ddot{\vec{q}}\) from \(\vec{q}, \dot{\vec{q}}\) (or in English, compute "accelerations" of the generalised coordinates given their positions and velocities), then we can put the entire problem into any ODE solver to make progress, as \(\od{}{t}[\vec{q}, \dot{\vec{q}}] = [\dot{\vec{q}}, \ddot{\vec{q}}]\), and the RHS of this expression is now a function of \(\vec{q}, \dot{\vec{q}}\).

So, let's get to it! Each equation of motion is now written as

$$P_{j}(\vec{q}, \dot{\vec{q}}) \cdot \ddot{\vec{q}} + Q_{j}(\vec{q}, \dot{\vec{q}}) = \sum_{k} c_{k} \cdot \left( \hat{\vec{F_{k}}} \cdot \pd{\vec{r_{k}}}{q_{j}}\right)$$

We can continue to use our symbolic algebra program to compute \(P_{j}(\vec{q}, \dot{\vec{q}})\). We do this by computing

$$

P_{j}(\vec{q}, \dot{\vec{q}}) =\pd{}{\ddot{\vec{q}}}\left\{ \od{}{t}\left[\pd{L}{q_{j}}\right] - \pd{L}{q_{j}} \right\}

$$

which can be done once again by using SymPy. Once we have computed all \(P_{j}\), we create a symbolic expression called \(b_{j}(\vec{q}, \dot{\vec{q}})\) containing

$$

b_{j}(\vec{q}, \dot{\vec{q}}) = \sum_{k} c_{k} \cdot \left( \hat{\vec{F_{k}}} \cdot \pd{\vec{r_{k}}}{q_{j}}\right) -Q_{j}(\vec{q}, \dot{\vec{q}})

$$

We can simply do this by substituting all \(\ddot{q_{j}}\) with 0 in the expression for \(\od{}{t}\left[\pd{L}{q_{j}}\right] - \pd{L}{q_{j}}\).

Now each equation of motion is in the form

$$

P_{j}(\vec{q}, \dot{\vec{q}}) \cdot \ddot{\vec{q}}=b_{j}(\vec{q}, \dot{\vec{q}})

$$

To be able to solve for \(\ddot{\vec{q}}\), we need to take all of the equations of motions together. We do this by stacking each of the expressions for \(P_{j}\) into a matrix, \(\mathbf{P_{j}}(\vec{q}, \dot{\vec{q}})\) and all of the expressions for \(b_{j}(\vec{q}, \dot{\vec{q}})\) into a vector \(\vec{b}(\vec{q}, \dot{\vec{q}})\).

This then creates the overall equation

$$

\mathbf{P_{j}}(\vec{q}, \dot{\vec{q}}) \cdot \ddot{\vec{q}}=b_{j}(\vec{q}, \dot{\vec{q}})

$$

But this is now just an equation of the form \(\mathbf{A}\vec{x} = \vec{b}\), where \(\mathbf{A}\) and \(\vec{b}\) are functions of \(\vec{q}, \dot{\vec{q}}\). We can use any linear system solver to find \(\vec{x}\), or in this case \(\ddot{\vec{q}}\).

Now to compute \(\ddot{\vec{q}}\) given numerical values for \(\vec{q}, \dot{\vec{q}}\), we simply substitute \(\vec{q}, \dot{\vec{q}}\) into \(\mathbf{P_{j}}(\vec{q}, \dot{\vec{q}})\) and \(\vec{b}(\vec{q}, \dot{\vec{q}})\) Note that \(\vec{b}\) also depends on the magnitude of the non-conservative forces \(c_{k}\). We can then simply solve the resulting linear system of equations to obtain \(\ddot{\vec{q}}\).

To summarise, here is how the overall time evolution of the system works:

- At each time step:

- Take the current values of \(\vec{q}\) and \(\dot{\vec{q}}\) (the values and first time derivatives of the generalised coordinates)

- Use some external function to compute the magnitudes of all of the non-conservative forces \(c_{k}\) from the current values and time derivatives of the generalised coordinates.

- In the case of the car system, this will involve finding the slip angles of all of the wheels and feeding them into some tire model to calculate tire forces, or finding the aerodynamic force from the velocity of the car.

- Calculate \(\mathbf{P_{j}}(\vec{q}, \dot{\vec{q}})\) and \(\vec{b}(\vec{q}, \dot{\vec{q}})\), and use the above procedure to find the second derivatives of the generalised coordinates, \(\ddot{\vec{q}}\).

- Use a standard ODE solution method (e.g. RK45, but in practice I use SciPy built-ins like solve_ivp) to find the values of \(\vec{q}\) and \(\dot{\vec{q}}\) at the next timestep.

- To elaborate on this, we create the state vector \(\vec{s} = [\vec{q};\dot{\vec{q}}]\). All the steps above allow us to write \(\dot{\vec{s}}\) as a function of \(\vec{s}\) i.e. creating a first order ODE to be solved using an IVP solver.

Closing remarks

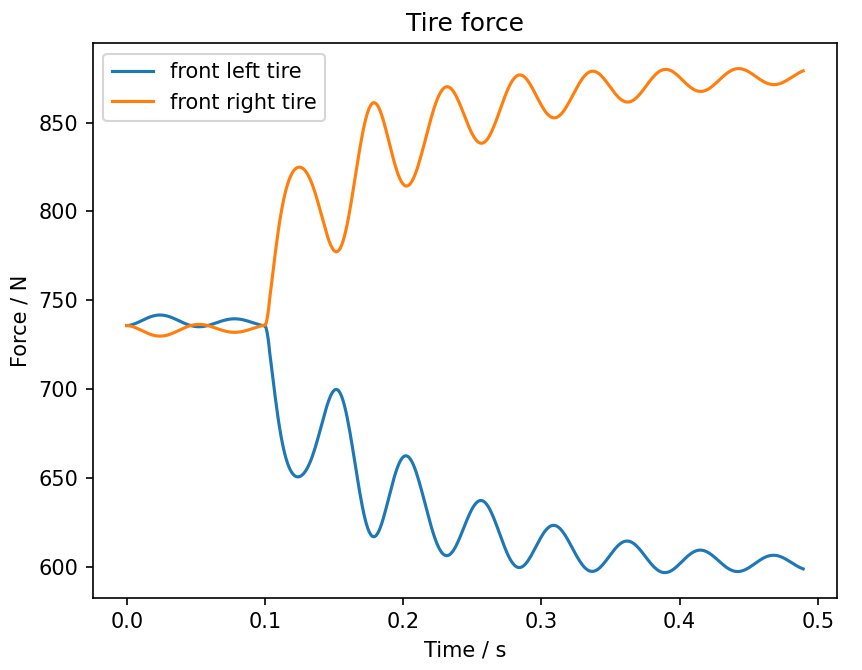

The power of this method is in the sheer complexity that a single model can encapsulate. We no longer have to model cars with simple bicycle models with steady state formulae; we have a generalised method to evolve models as complex as they get. The use of generalised coordinates also allows us to directly track values of interest, such as the yaw rate of the car or the roll of the car. The use of symbolic algebra expressions allow other values of interest (e.g. shock length, tire normal forces) to be easily computed on top of this. To showcase the power of this model, here is a graph showing load transfer as a driver enters a turn following a step steering input:

And here is a video of a simulated car drifting!

Room for improvement

No project is truly done without thinking about where you can go next!

- The simulation speed is quite slow at the moment. Straights run fine, but cornering is up to 10x slower than real time

- This makes lap time simulation quite difficult. I have an entire layer on top of this to somehow precalculate cornering speeds to allow single laps to be run in reasonable amounts of time.

- This could almost definitely be greatly alleviated simply by porting code to C++. At the moment, I am using Numba compilation to speed up function evaluation, but to use SciPy's IVP solver, the data is brought back into Python at each time step for further processing

- It is somewhat difficult to model nonlinear suspension into this model: a lot of suspension is designed with a progressive motion ratio, which cannot be simply modelled in this system

- Time permitting, we will look into potentially constructing new potential functions to account for this.

- Some problems do become harder

- Solving for steady state corners, for example, has proven to be somewhat difficult

- This may be true because steady state corners may not exist due to modelled dissipation. However, it should still be possible to find "close enough" solutions.

- Solving for steady state corners, for example, has proven to be somewhat difficult

- There are kind of "follow-on" problems on how to utilise the power of this model. For example, how would corners best be modelled? It is now a new challenge to find the optimal driver inputs / racing line to simulate for the car when going around a circuit.

But overall, I am super happy with model and its power, and I can't wait to see where the project goes next!